After deciding that I would use Sun Solaris and its ZFS file system as the foundation for a home fileserver, the next part was to select compatible hardware, as Solaris has fairly limited driver support for hardware.

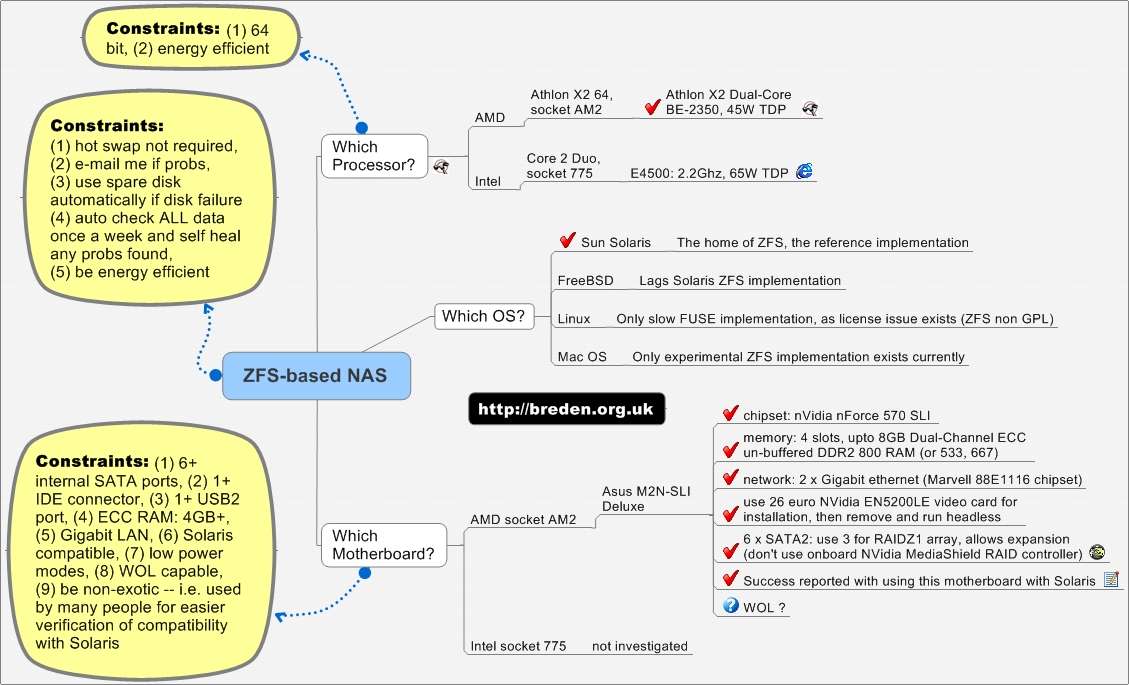

After hunting around on the internet and the Sun Solaris Hardware Compatibility List, I decided on some hardware to make a Solaris/ZFS-based fileserver. Here’s an image that shows the design brief/constraints that were important to me:

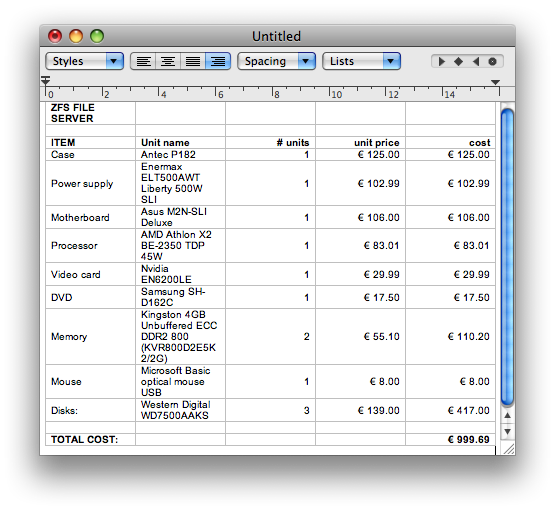

Here is the list of components I finally chose and their prices in Europe around mid-January 2008:

This choice of components gave a very quiet fileserver with about 1.4TB of redundant data storage: 2 disks’ worth of ~692GB each = ~1.4TB for data, plus 1 disk’s worth (~692GB) for parity data. (RAIDZ1 actually spreads the parity across all three disks, but the capacity cost is equivalent to one disk.) It’s incredible that this marketing swindle is allowed to continue, where you always lose a substantial amount of space compared to the amount quoted on the case and in the advertised specs. Where is the European Commission on this one, I wonder? I mean, you buy a 750GB disk and end up with 692GB, what a con!

ZFS works best with a 64-bit processor and lots of RAM, the more the better, apparently. I did tests with 2GB of RAM and it worked well, but as RAM is so cheap, I splashed out on 4GB of RAM to ensure I created a 100% kickass fileserver, where I can throw around vast amounts of data, especially video, without the machine sweating too much 😉

Let’s just run through the component list:

- Case: Antec P182: Not the cheapest case, but is superb quality, really quiet, has Antec TriCool fans, lockable front door (kid proof), front has 2 x USB2, 1 x Firewire 400 and audio ports. Inside, there is a 4 x 3.5″ drive bay that has its own wind tunnel chamber for efficient cooling of the 4 drives. Additional 2 x 3.5″ drive bay. All drives have silicone rubber grommets to absorb drive vibrations and prevent transmission to case chassis, making a really quiet machine.

- Power Supply: Enermax ELT500AWT Liberty 500W SLI: Not cheap, but is a high quality, rock solid, stable and efficient power supply, modular so you attach as many supplied cables as you need for drive power to 4-pin molex & SATA power connectors. Has additional SLI power connectors which I don’t use. All supplied power leads are protected from scratches/cuts with a plastic mesh shroud.

- Motherboard: Asus M2N-SLI Deluxe: Superb quality motherboard with 6 SATA ports powered by NVidia nForce 570 SLI chipset, additional 7th onboard SATA port powered by the JMicron JMB363 chipset which also powers an external eSATA port on the motherboard’s IO back panel, 2 x Gigabit ethernet network ports, Firewire 400, 4 x USB2, Audio etc. The motherboard allows re-flashing the BIOS from a USB stick/thumbdrive, which avoids using obsolete floppy drives. Nice!

- Processor: AMD Athlon X2 BE-2350: Chosen for its energy efficiency (45W TDP) and the fact that it’s a dual-core 64-bit processor (ZFS works best on 64-bit hardware; 32-bit processors are not recommended).

- Memory: Kingston 4GB DDR2 800 ECC RAM (KVR800D2E5K2/2G): This is 2 kits, each containing 2 DIMMs of unbuffered ECC dual-channel memory. I chose ECC RAM as it can detect and correct single-bit errors, and it was only about 10% more expensive than the non-ECC memory, so it’s a bargain. As ZFS is all about end-to-end data integrity, let’s have some good RAM too 🙂

- Disks: Western Digital WD7500AAKS: I got 3 of these 750GB drives to give around 2.1TB of capacity, distributed as ~1.4TB for data and ~0.7TB for parity data (redundancy). The drives have excellent properties for low noise, low vibration, fast read/write speeds and they run fairly cool. I considered getting the Samsung HD753LJ drives but there were reports of variable quality batches, many DOA, some with vibration issues/loud noises (see newegg.com), and somewhere I saw some compatibility issues with NVidia motherboard chipsets. Also the Samsung drives were failing Samsung’s own drive diagnostic tool tests! Scary.

- Video card: Asus EN6200LE: Cheap, energy-efficient, passively cooled (quiet, as heatsink only) video card that works great. Only used for setup and configuration so doesn’t need to be fast — we’re not playing high-speed video games on this machine 🙂

- DVD-ROM: Samsung SH-D162C: Cheap DVD-ROM drive — only used to install Solaris, so no burner required.

I used a mouse & keyboard from my spare parts box to save money. Also, I used an old spare IDE disk (Parallel ATA) as the Solaris boot disk and root filesystem/swap etc, again to save money and make use of otherwise redundant old hardware I had lying around. The beauty of this is that the OS is completely separate from the ZFS data pool and this is important whilst I am experimenting with new Solaris builds as they are released. When a new release is available that gives some required bug fix or new driver functionality, I can simply reinstall the OS without zapping my data. When the new OS has installed and the machine boots, the ZFS data pool is recognised and auto-mounted. Nice!

With all the above hardware installed, with 3 drives for the ZFS data pool and the IDE boot disk running the OS, my power meter shows an idle power usage of around 115 Watts. This is more than I had hoped for, but here I see that someone says Solaris cannot currently put dual-core processors into lower power modes (CPU frequency scaling?). The guy says that the same hardware under Linux used 30 Watts less power, so hopefully Sun will fix this ASAP.

Overall, the machine using this hardware seems to run really well and performs well. The only thing that didn’t work under Solaris (SXCE snv_b82+) was the audio, but I don’t care about that at all.

Also the power management doesn’t yet seem to support suspend mode, but I understand Sun have a project underway to sort that out, so one day…

Once Sun also fix the dual-core power management to reduce the power to the dual-core processor when it’s idle, this will help in reducing power consumption too.

In the meantime, this is a great, well-performing machine which handles data in and out of the machine pretty quickly for a relatively cheap, general-purpose fileserver.

It’s possible to save about 300 euros off the above component list cost, by buying a combined case/power supply (- ~150 EUR), for example Antec NSK6580, using a cheaper processor (- ~30 EUR) and installing only 2GB non-ECC RAM (- ~60 EUR), plus the disks dropped 20 EUR each since I bought them (- ~60 EUR).

Now, I’ll move on to the software configuration and some useful Solaris and ZFS commands to help make this system work in the next part.


Anyone more familiar with Linux may like Nexenta, http://www.nexenta.org/os/Download

I have blogged about it a bit at http://www.thattommyhall.com/category/opensolaris/

Your hardware choices were similar to mine, though I use the Supermicro AOC-SAT2-MV8 as it has the same Marvell chipset used in thumper. You can even use the “hd” command seen here http://www.infoworld.com/archives/videoTemplate.jsp?Id=1152&type=Screencasts&tag=Storage (though watch out, the guy doing the demo is a bit of a muppet and creates a non redundant pool)

I have not played with the CIFS stuff yet as I am in the process of moving house.

Tom

@Tom Hall:

Nexenta looks like a nice, small distribution that can run off a USB memory stick/thumbdrive and I would like to try it out sometime. I’ll take a look at what you’ve written about Nexenta on your blog. Good luck with your house move!

Yes, I think that a script could have helped the guy get things right in that ZFS demo video 😉

What was the main reason you chose to get the Supermicro SATA card instead of using a multi-SATA connector motherboard like the Asus M2N-SLI Deluxe with 6 SATA connectors? (speed, compatibility, or more disks?)

I went for that card as I knew driver support would be good as Thumper uses the chipset. It also has lights to show activity if you have a hot-swap bay and maybe you want more than 6 drives?

I will soon be filling one of these http://www.vadim.co.uk/Lian-Li+PC-343B+Black+CASE+-oq_NO+PSU-bq_ with some of these http://www.vadim.co.uk/ICY+DOCK+MB-455SPF+-oq_black-bq_

I do worry slightly about electricity bills though.

Wow, Tom, that beast looks like the real Pimpmobile of storage devices — I can just imagine it with lowered suspension, furry dice, fat exhaust pipes and a 2KW sound system 🙂

I think when you switch that bad boy on, your neighbours’ lights are going to blow 🙂

What are you doing there — running the National Weather Computing Centre or something?

And yes, I expect if you fill that thing with drives and run it 24/7, you’ll be looking at a major electric bill. Let me know if you ever get it built and fill it up with drives — a nice pic of the “Kill A Watt” power meter’s display please! 🙂

The Supermicro card looks good though, for large systems.

“I mean, you buy a 750GB disk and end up with 692GB — what a con!”

Actually, they aren’t lying, the public just has their definitions mixed up. GigaByte can mean either _exactly_ 1000^3 bytes or _approximately_ 1024^3 bytes.

So, 750GB is about 698 GiB, which is what your computer is reporting.
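The conversion behind those figures is easy to check. Here is a minimal Python sketch (the function name `gb_to_gib` is just an illustrative choice) that turns an advertised decimal capacity into the binary units many operating systems report:

```python
# Convert a drive's advertised decimal capacity into binary units.

GB = 1000 ** 3   # gigabyte: decimal (SI) definition used on the box
GiB = 1024 ** 3  # gibibyte: binary (IEC) definition


def gb_to_gib(capacity_gb: float) -> float:
    """Return the size in GiB of a capacity given in decimal GB."""
    return capacity_gb * GB / GiB


if __name__ == "__main__":
    for advertised in (500, 750):
        print(f"{advertised} GB = {gb_to_gib(advertised):.1f} GiB")
```

A 750 GB drive comes out at roughly 698.5 GiB, so nothing is actually missing; the same bytes are just being counted in larger units.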

http://physics.nist.gov/cuu/Units/binary.html (I realize this is a US standards organization, and you are in the UK)

I promise I’m not a troll. I’m very very interested in your write up on ZFS, and I believe you have convinced me 😉

@Steven: Thanks for that. I was aware of the base 2 and base 10 usages, but it’s interesting which one the marketing departments use though, isn’t it? 😉 And it’s never nice to unwrap a ‘750’ and see only ‘698’ if you know what I mean.

Glad you’ve been convinced about ZFS. Seems like the Sun engineers have done a great job with it. Of course, if it loses all my data in the coming months, I’ll review my opinion 🙂

I’ll be writing more on ZFS soon — watch this space!

I figured you were aware of base 2/10, I just like to make sure I’m thorough. You never know, in IT, when someone knows something or not, so I tend to lean towards the safer side.

It’s unfortunate that the public doesn’t understand it, and I’m certain that the drive manufacturers exploit this intentionally.

ZFS does seem like an amazing piece of software. The more I read, the more I like it. I’ve been wanting to build a NAS device for quite some time, and was originally going to use OpenFiler, but it seems I may end up using Solaris+ZFS to build a sort of “SOHO SAN”.

Thanks for the articles.

“Only used for setup and configuration so doesn’t need to be fast”

Did you pull the video card after installation and run headless? If not, I wonder how much lower the total power consumption would be if you pulled the card now.

Currently, I’m considering a similar setup.

Asus M2N-E

Asus EN6200LE (thanks :))

8GB DDR2

AMD Athlon 64 X2 5000+ Brisbane black edition

Corsair VX450W

4x WD5000AAKS

I’ll plug in a DVD drive to boot and configure. I may even purchase a cheap one should I find it. I’m not happy about the issue with the software not scaling the CPU properly and thus using more watts. I was planning to, essentially, undervolt the 5000+ to eke out a few watts of savings. But that might not be noticeable should the software fight against me. 🙁

@Steven: Yes, the drive manufacturers do exploit this.

Yes, from what I’ve read and experienced so far, ZFS is an incredible file system built to be bullet-proof and simple to use. Good luck with building your systems and it’d be great to hear about your experiences. You could also post to Tim Foster’s blog at Sun — he’s got a page called ‘Tales about using ZFS as a home fileserver’: http://blogs.sun.com/timf/entry/tales_about_using_zfs_as

Good luck!

@Rick: I tried pulling the video card but then the system didn’t get very far in its boot stage, perhaps not even past the POST. In the BIOS I have it set to halt on all errors, so maybe I need to change that and see if it continues. Running headless would probably save around 10 to 25 Watts, as the EN6200LE is a low-power graphics card, I think, but I can’t find specs on the Asus site.

I used the M2N-E in the backup box running Solaris/ZFS, so I can say that that works, even the audio works with this motherboard. It seems to be a great little motherboard.

Due to the low cost of ECC RAM (10% premium or so), I would be tempted to go for that, and I think the M2N-E will take it. I used ECC with the M2N-SLI Deluxe. Peace of mind at virtually no extra cost 🙂

FYI I recently installed build 85 of SXCE and I’m seeing some reduction in power usage which I think is due to recent changes in the power management within Solaris, but I haven’t verified if this is true. I’ve seen the power drop to around 77W from around 120W when idle after a few minutes. It might be worth trawling the opensolaris.org discussion forums to see what’s going on there. You might find this useful, but I haven’t tried it yet: http://blogs.sun.com/randyf/entry/solaris_suspend_and_resume_how
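To put that drop in perspective, here is a rough Python sketch of what it means over a year. It assumes the machine sits at the idle figure around the clock, which a real fileserver will not do exactly, and the electricity price is a purely hypothetical placeholder to swap for your own tariff:

```python
# Rough yearly energy estimate for a machine that runs 24/7.
# Assumption: the box draws a constant load all year round.

HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts: float) -> float:
    """Energy used in one year by a constant load, in kWh."""
    return watts * HOURS_PER_YEAR / 1000


def annual_cost(watts: float, price_per_kwh: float) -> float:
    """Yearly running cost for a constant load at a given tariff."""
    return annual_kwh(watts) * price_per_kwh


if __name__ == "__main__":
    price = 0.15  # hypothetical tariff per kWh, not a real quote
    before, after = 120, 77  # idle watts before and after the upgrade
    saved_kwh = annual_kwh(before) - annual_kwh(after)
    print(f"before: {annual_kwh(before):.1f} kWh/year")
    print(f"after:  {annual_kwh(after):.1f} kWh/year")
    print(f"saved:  {saved_kwh:.1f} kWh/year, "
          f"about {saved_kwh * price:.2f} at the assumed tariff")
```

Under those assumptions, going from 120W to 77W saves a little under 380 kWh per year.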

Good luck and let me know how you get along with building the new machine!

Simon: Very good to hear about the drop in power consumption. That’s a big difference. I won’t start building for another week or so. I’ll post and let you know how it goes. I’m going to do my best to remove the video card and run headless.

As to the suspend/resume issue: I was going to break up my ZFS pools. One pool will probably always be active, the other will most likely be inactive for long periods of time. I plan to use the “green” WD drives. I’m hoping that they will actually spin down. Because the file server will be active most of the time, I don’t see that I’d ever get much use out of suspend/resume. However, thanks for the link. I will keep watching to see what happens on that front.

@Rick: Yes, it would be good to hear how your build goes, and how you managed to make it run headless, and what your power meter shows.

Did you find people who’d had success with the WD GP green drives using Solaris and ZFS?

Simon: No, I haven’t. http://www.wdc.com/en/products/greenpower/technology.asp?language=en makes me think that it won’t be OS specific. And I guess the spec doesn’t actually say that they will spin down. It appears the Green rating is because of the features noted above. Maybe I’ll have to see if I can use suspend on those drives…

Anyway, I will tell you how it goes. The initial set of drives won’t be the “green” ones.

Rick: OK, because somewhere I seem to remember someone saying that they had problems with the WD GP Green drives and so had gone back to using WD5000AAKS or WD7500AAKS drives, so it might be worth doing a thorough search before buying them and getting a surprise. I’m sorry that I can’t remember where I read about this though.

The funny thing about those green drives is that WD state them as 7200 RPM drives, but when a strobe test was done on them it showed that they actually spin at 5400 RPM. I think the reason that WD quote them as 7200 is that they fear they wouldn’t sell so many, as people would expect slower rotational speeds to yield slower data read/write speeds. The drives seem to get very respectable read/write speeds via some clever tricks, which I think they describe on their product description tech pages. The low energy usage is very appealing, but not at the cost of problems, if what I said above about people having trouble with them is correct. If you track down the source of any possible problems with these drives, I would be interested to hear.

Simon: Everything will arrive in “3-4 days”. Unfortunately, I’ve not upgraded anything in a long while. So I will have to thoroughly test each device as a first step, and RMA anything that’s broken. Not having another set of known-working equipment, I’m slightly nervous. I won’t be able to tell if a problem with the memory is actually a problem with the memory or a problem with the mobo, etc. 🙁 Hopefully, newegg will do me right and there will be no issues. Right now, I’m trying to decide what to install. For starters, I’m going to go with Solaris 10 (8/07). I’m still not fully versed on the OpenSolaris available downloads. Re-reading http://opensolaris.org/os/downloads/ makes me think that none of them are really ready for everyday use as a server. Although, your http://breden.org.uk/2008/03/08/home-fileserver-zfs-setup/ makes it seem like I may want SXDE.

The box I’m building will be used mainly for storage purposes. I’d like to get as close to 100% uptime as possible. I really only need some kind of SMB/CIFS or NFS for file sharing. From all that I’ve read, it appears that SMB/CIFS is the way to go right now. Connections should only be from a windows box. Later in life, they should only come from linux or mac. At that point, I’ll have to verify how much speed is offered by NFS vs SMB. And examine how they both work.

@Rick: What’s your hardware component list? Hopefully all will be fine. If I were you, I would install SXCE (see Getting and installing Solaris above). I’m using build 85 with success, and there’s a build 86 out today which might be good too.

Regarding remote pool access, I would share with CIFS for now, and later when you need it, add NFS access for Linux. CIFS share info is shown on the setup page, I think.

Good luck!

Simon: As listed above (except, I guess this is definitive now as it’s on order and shipping 🙂 )

Asus M2N-E

Asus EN6200LE

8GB DDR2

AMD Athlon 64 X2 5000+ Brisbane black edition

Corsair VX450W

4x WD5000AAKS

I’ll be using a plextor plexwriter DVD to perform the install. I’ll remove it after I’m done installing. I will *probably* remove the video card too… not sure though. We’ll see how it all works out.

You have to love newegg. 🙂 Everything arrived today. Ahead of schedule. I’m unprepared at this point, but I should have info for you by the end of this weekend. I’m crossing my fingers hoping I have no needs for an RMA. … I feel bad with a running commentary on this blog… I should probably just email you if you want to know the results and whatnot.

@Rick: No problem to post here — text is cheap 🙂

Lucky you, it’s great when the box of new goodies has arrived 🙂

Wow, 8GB RAM — that should be fantastic! ZFS *loves* loads of RAM apparently, so it’ll cache up loads of stuff and make common read accesses come straight from RAM across the network (gigabit switch?). If I were you I might leave the video and DVD drive in for a while until you get comfortable with it as you’ll probably find it easier to mess around with it. If you’re going to use SXCE they bring out new versions every 14 days (Fridays), so if you’re waiting for some bug fix, it will be tempting to upgrade fairly frequently and use the DVD to reinstall. CIFS seems to be being worked on quite heavily now so there’ll probably be quite a few tweaks and fixes released every few weeks.

Simon: It’s great except this shipment is early… the PSU isn’t scheduled to arrive until Monday. 🙁 I may have to take apart some boxes and reconfigure them with older less efficient power supplies so I can use the newer ones I have to run this board until the permanent PSU comes on Monday.

Everyone I talked to so far is amazed at the ram. I paid $200. I figured this was a wicked steal. As far as I could tell, it should be perfect with this mobo. We shall see. A lot of forums said this mobo was very picky about ram. So I was very careful in selection. The biggest complaint factor was about voltage. I made sure to get ram within the acceptable voltage range. Or at least what everyone was whining about.

You’re so flippant with the reinstall you’re scaring me. 🙂 I don’t plan to reinstall after 14 days. Granted, before I get everything configured the way I want to, I may be installing the latest build each week for a month. But, once everything works to my satisfaction, I will just let it run. I won’t be surprised with 300 day uptimes. 😉 I would just install Solaris 10 from Sun, but I think there’s a lot of stuff I’d be missing out on. I probably should check out LiveUpgrade and see what the requirements are going to be. Then I may get away with removing the DVD drive early. 🙂

When I get it up, I’ll test out the memory with mem test to verify it all works with no issue. Then I’ll install solaris. Hopefully, by that point, I’ll have 0 issues. I’m completely paranoid, and convinced, that I’ll be RMAing some random part. I was so paranoid that I played the try and buy program with Sun. But the box they sent just wasn’t worth the price tag compared to what I could build myself. Of course, this means there’s the potential I will have to RMA random parts and I may lose out if something falls beyond the 30 day return policy.

I’ll just hope for the best.

Yes, this will be installed on a gig switch. Only one other box on the network talks gig. But, that may change in the future. It would be nice to have file transfers at blazing speeds, but I’m sure the bottleneck is going to be the other computers read speed, not this (soon to be) box’s write speeds.

This sucks. I’m staring at parts on my desk which I cannot install in a box just yet. Oddly, staring harder doesn’t help. 🙂

Keep on posting here – I’m considering going with a setup similar to Simon’s and I find it helpful to follow along with your conversation.

@Rick: That’s a pity they didn’t send all the gear at once! If you’re keen to build this weekend, if you have a suitable PSU from another box, say 300W+, that might work out OK for now. In use you’ll be using around 120W idle and during heavy read/writes about 140-150W or so. On powerup you’ll need around 250W+ due to initial surge required.

You’re right, Solaris can run for years without crashing. I saw a thread on the OpenSolaris.org site asking for the longest uptimes – think I saw one around 4 or 5 years quoted and that was to change the batteries in the UPS! 🙂

Don’t worry too much about my comments on reinstalling. It was only because the JMicron JMB363 chipset was unsupported on the initial Nevada build I was running, and the driver was updated 4 builds later, so I decided to upgrade. Then there was a bug in the handling of old file dates (1970) on some ancient photos I had, so I upgraded for that too, etc. There is a minor, inconvenient bug in my current Nevada build 85 where on boot it sometimes fails to set up the shares for CIFS, and the fix is issuing a manual ‘sharemgr’ command, I think. So I would consider installing the latest SXCE, which is build 86, unless people report trouble with it.

Don’t worry too much, I’ve built a few systems and never had any problem with RMAs. Perhaps I was just lucky?

Good luck, and I hope to hear from you soon after you’ve issued a ‘zpool create megapool raidz1 disk1 disk2 disk3 disk4’ (get the disk ids by typing ‘format’ first). Then you can ‘zfs list’ and see about 1.5TB of available redundant storage capacity 🙂
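For anyone wanting to sanity-check the numbers before creating a pool, here is a small Python sketch. It only estimates capacity and assembles the command text; it does not run anything. The helper names and the disk ids (`c1t0d0` and so on) are hypothetical, so use the ids that `format` reports on your own system. Note that the vdev keyword is written in lowercase (`raidz1`), and that the estimate ignores filesystem overhead and the GB/GiB difference.

```python
# Estimate usable RAIDZ capacity and build (but do not run) the
# matching "zpool create" command line.


def raidz_usable_gb(disk_count: int, disk_gb: float, parity: int = 1) -> float:
    """Usable space: total minus one disk's worth per parity level."""
    if disk_count <= parity:
        raise ValueError("need more disks than parity devices")
    return (disk_count - parity) * disk_gb


def zpool_create_command(pool: str, disks: list[str], parity: int = 1) -> str:
    """Return the command text for a single raidz vdev pool."""
    return " ".join(["zpool", "create", pool, f"raidz{parity}", *disks])


if __name__ == "__main__":
    # Hypothetical disk ids; replace with the ones shown by 'format'.
    disks = ["c1t0d0", "c1t1d0", "c1t2d0", "c1t3d0"]
    print(zpool_create_command("megapool", disks))
    print(f"usable: {raidz_usable_gb(len(disks), 500):.0f} GB")
```

Four 500GB disks in RAIDZ1 leave three disks’ worth for data, i.e. 1500GB, which is where the 1.5TB figure comes from.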

So, I started building with another PSU. 🙂 I couldn’t wait. However, I was greeted with a blank screen when I first booted. After freaking out a bit, and troubleshooting for hours, I found that I have one bad memory module. I’ll have to RMA 2 (they’re in a 2 pack). I am currently checking the other 3 modules with memtest to ensure there’s no issues with them. One is fine so far. I did not get ECC by the way.

Tomorrow I’ll send the memory back and I’ll start installing software. I will update here. I’ll add specs tomorrow.

@Rick: I thought you might start building it now 🙂 Pity about the bad RAM module, but 4GB will work well anyway. Good luck!

Simon: OK, the first load went well. I thought I may have downloaded the wrong version because the install iso’s grub asked me to install the “Developer” edition. However, once installed, uname -a results in the “Community” edition and the grub loader also says “Community” edition; I think I downloaded the correct ISO, at least a SXCE one, maybe not the most recent.

The temporary setup is:

SeaSonic SS-380GB (temporary until the Corsair arrives)

Asus M2N-E

AMD Athlon 64 X2 5000+ Brisbane black edition

Scythe Ninja

Asus EN6200LE

4GB Mushkin EM2 6400 (RMAing the second pack of 4GB)

WD 3200 IDE (320GB)

Plextor Plexwriter PX-716A

The M2N-E has issues with RAM that needs a high voltage. The Mushkin I got is 1.8V. Most of the posts I’ve seen that call the M2N-E a bad board come from people using RAM at 1.9V or higher. The Mushkin is slow RAM compared to what you’d have in a gaming rig (5-5-5-18), but it works just fine in this instance. The Ninja fits nice and snug. At first, it looked like the last bank of RAM would not be removable once the Ninja was on, but that’s not the case. Everything fit fine. The left side does cover the heatsink from the heatpipe of the northbridge(?), but I don’t see any significant issues there. The CPU temp was 41C after SXCE installation, the chipset registered at 42C. I’m assuming that’s the northbridge. After installation of the 4 SATA drives, I’m sure the temperature will increase slightly, but I’m going to have a different case with more adequate cooling by that time.

Powered off draws 6 watts. I’m likely never to have this off. 🙂 As is, startup draws 110 watts peak. Running it pulls 99-101 watts. I’ll have to work on that. Hopefully with the GPU removed and the DVD removed it will consume less power. I still have 8 watts per drive to add also. Adding those 4 WD 500GB drives should add roughly another 32 watts (4 x 8). At this point, my other box has my drives (complete with data!) still in them. Once I get the new box and OS tweaked, I’ll figure out how to temporarily move my data, then remove the drives and shove them in the new box and zfs them. Then I’ll copy on the data.

Bootup sucks. It took about 5 minutes for the initial boot. Subsequent boots appear to take 2-3 minutes. There were a few error messages that I’m looking into. Nothing big. I’m sure I can clear them all up. I’ve not been admining solaris in years, so I have some catching up to do. I need to find a way to monitor temperatures within solaris instead of relying on the BIOS. 🙂 I’ll be checking blastwave for packages I’m familiar with from linux.

Sitting looking at it, I’m really not sure if I have the correct version. Last night I tried checking for updates/patches. I got some random messages that I clicked through, a good reason not to build at 3AM. It appears there are no patches available. Maybe I do have the most recent version. Or, I just clicked through some important thing that now won’t let me update.

Quick update: I’m on my 5th install. I think I may have finally gotten it straight. 🙂 I have issues with my DVD drive. I may have physically damaged it. 🙁 Set swap to 2GB and / to the rest of disk. Looks like I have SXCE bn86 loaded. A few issues are annoying me that I am trying to figure out (while moving my data off my other box… so I can steal those drives for the ZFS volumes).

The bootup is not slow. It appears that my first install had some issues and that was causing the slow boot process. So did my 2nd through 4th. This install is better. But I may have hosed a few things due to the DVD install media/DVD drive. I’m going to grab down the ISO again and mount it locally. We’ll see if I can fix it by re-installing some components that appear to be giving me problems.

@Rick: Practice makes perfect 😛

It’s good practice anyway as it gets you used to a new OS and its quirks.

Which DVD reader did you use to install? My Samsung SH-D162C was OK to install but gave me some problems when reading back some media later, so it’s probably some driver issue.

If you have a 4GB USB memory stick search for someone’s blog who installed it from one. I think they tweaked the install so that the USB stick was mounted at /cdrom to fool the installer as some bits of the installer assume that it’s installing from CD-ROM / DVD install media, which works most of the time, but… 🙂

Which components give problems, as I’m using one build less than you (build 85) ?

DVD: Plextor Plexwriter PX-716A

There were a number of things giving me problems. In the last build I had, there were random problems with the install: smc would fail to run, the updatemanager would fail to run, etc. Seeking out the answers to the error messages didn’t help much. The last straw was an error message stating “can’t set locale”. I tried numerous things to fix that issue. syslog would generate that message for a number of services and upon shutdown the console would generate 5 or 7 of those same messages.

In the end, that was it. I figured either my install disk is bunk, or there’s an issue with 86. So, I’m currently installing build 85. 🙂 We’ll see how that goes.

Fortunately, I have patience. And, having been away from solaris for a few years, I’m happy for the troubleshooting exposure I’m getting.

So, I finally got it all working. So far at least. It appears that the last issue I was having was covered here: http://www.opensolaris.org/jive/thread.jspa?threadID=57019&tstart=0

I have a fully functional SXCE 86. After purchasing a new DVD writer, everything is working normally and the install went fine. I’ve been annoyed by this http://www.opensolaris.org/jive/thread.jspa?threadID=57300&tstart=0 issue. But, aside from that things are looking good. I took the appropriate time to set up a filesystem, mirror, and share 465GB worth of HD space with zfs. I think that was about 3 seconds. 🙂 I have yet to verify SMB connectivity. I’ll get all that out of the way today. Even the onboard sound was recognized by the OS. Although, I’ve since turned it off in the BIOS since this will be a fileserver. It’s nice to know that all components on the board are supported.

@Rick: Well, after a few problems, it’s good to see that you got it running OK. I don’t recall seeing, or should I say hearing the second problem with the glitch on the IDE drive — I’ll listen a bit more carefully when I reboot.

You reminded me, I need to update the details in the Setup page regarding CIFS sharing setup, as there were a couple of additional items needed (changed from build 82 to build 85), so I’ll do that now while I remember… see: http://www.opensolaris.org/jive/thread.jspa?threadID=55981&tstart=45

And good to hear they got the sound driver fixed for the M2N-SLI Deluxe motherboard, although, like yourself, I have disabled sound in the BIOS.

Do you find the machine/case fairly quiet?

Simon: SMB links:

http://www.opensolaris.org/jive/thread.jspa?threadID=55981&tstart=45

http://docs.sun.com/app/docs/doc/820-2429/configureworkgroupmodetask?a=view

SMB is up and running great. I’m *so* happy now. 🙂 ZFS rules. 🙂

With just the IDE drive, it was deathly quiet. Seeks were incredibly loud because there was no background noise at all. The Scythe fan is silent. The Corsair fan in the PSU is also silent (props to http://silentpcreview.com, those guys are great!). Currently I have the WD 320GB drive and 2 WD 500GB drives installed. I can hear/feel the 2 500GB drives. It’s a low thrum sound and every once in a while I hear the telltale “seek” noises they make. However, the box is currently sitting open, on my desk, next to my monitor. I have a new case arriving sometime soon. Based upon the noise level now, I am assuming this thing is going to be very very quiet once it’s on the floor next to my desk.

With the case open, the thrum of the hard drives is just a bit louder than the laptop sitting next to it. But not by much.

Oh, and, this isn’t the M2N-SLI, this is the M2N-E. For fun, I’m going to enable the sound for a bit to test out the quality.

Even though this is supposed to be my fileserver, I’m currently downloading a bunch of stuff from blastwave to make it more like a desktop. 🙂

Oh, and, last but certainly not least: I’m pulling 112 watts at the wall with large file transfers taking place across the SMB share and downloads from blastwave installing. Idle I was getting 100 or so watts. I’m sure with tweaking the AMD black, I can get it under 100, even with the video card and DVD writer in.

@Rick: Glad to hear you’re pleased with ZFS 🙂 It really is superb!

Yes, should be quiet in the new case. Which one did you choose?

Sorry, yes, you got an M2N-E, not an M2N-SLI Deluxe, which explains why the sound works, as my backup server runs on an M2N-E too and I noticed that the sound worked on that one 😉

If you get it running headless, let me know what you did to get it to work, as I tried it once but it didn’t load the OS, didn’t get past POST, and as there was no graphics card I couldn’t see what was going on – ha ha 🙂

Simon: The case is a Lian Li PC-V1000 aluminum. It was basically someone’s cast-off. So I’m giving it a home. 🙂 When it arrives, I’ll try running headless.

I managed to get a number of things up and running that were important to me. And while playing around, I installed CentOS in a branded zone. I’m a bit disappointed. I feel like there was a lot of hype surrounding running Linux in Solaris. It’s neat that you can, but I haven’t found any practical reason to do it just yet. I’m doing it just because you can. It’s kind of sucky that it’s really limited to the 2.4 kernel. The 2.6 support is being developed, and for that I’m excited, but there are a bunch of issues that I’ve read about when attempting to install and use it, so I’ll stick with 2.4 and not worry about it just yet.

I am very happy that VirtualBox (even if it is beta) runs. That’s great! I was hoping I wouldn’t have to build another box to run my virtual machines. Now I can rebuild them with vbox directly on Solaris. That’s one thing that x86 has over Sparc. I don’t think you can run the vbox on Sparc at this time.

I was also happy to find a replacement for TVersity. One of the reasons I was going to have to run a virtual machine was to be able to have a WinXP box that ran TVersity. But, I found an article (http://blogs.sun.com/constantin/entry/mediatomb_on_solaris) on how to install MediaTomb. This covers my need to feed media to the PS3.

Now all I have to do is find a nice replacement for Conky while I use this thing as a desktop, even though it’s supposed to fill the role of my server. 🙂

Simon: I’ve built one of these myself using your experiences as guidance.

I’d like to remove the EIDE boot drive and instead, use another SATA drive connected to the JMicron controller as a boot drive.

Is this something you’ve tried? I can’t get Solaris to even recognize any drive I attach to the internal JMicron SATA port.

Hi Brian,

I’ve not tried booting off a SATA drive connected to the JMicron SATA connector, but I think it should work. To give yourself the best chance of making it work you could try getting the latest Solaris (build 87), although from memory the JMicron support was added to Solaris in build 82.

First, make sure you have the latest BIOS (1502), download it and unzip it onto a USB memory stick/thumbdrive. I just upgraded my M2N-SLI Deluxe’s motherboard with this today but I didn’t try the JMicron SATA for booting yet, as I just use an old 40GB EIDE drive for the Solaris boot disk. Once you have the latest BIOS installed, ensure the JMicron SATA port is enabled in the BIOS, and the drive is enabled in the Boot tab of the BIOS settings.

After upgrading the BIOS, if it doesn’t boot (like mine today!) then you just have to pull the power cord out, flip the CMOS battery out, move the CLRTC jumper from pins 1 and 2 to 2 and 3, wait 15 seconds, then move the jumper back to pins 1 and 2, push the battery back in, and power up. Then you’ll need to re-enter the BIOS settings, but it’s good practice 😉

Let me know if that works, as I’d be interested to know, as I was thinking of that possibility too.

Hi Simon,

I just ordered basically the same HW as you have specified in your blog post.

But one question, did the mobo come with more than one SATA cable, or do I need to buy more?

Will you (and anyone else who’s tried this setup out) be upgrading to the just-released OpenSolaris 2008.05 release?

Also, in your reasoning I never saw any consideration of a SPARC-based Solaris solution. Much like you most trust ZFS on Solaris, I most trust Solaris on SPARC (especially given that Sun almost pulled the plug on Solaris 10 x86 entirely, a couple of times). Was that simply never even a thought? 😉

Thanks Simon. I’ve done all that, still no joy.

Never mind booting. At this point I think I’d be happy if I could just use the port for another drive. But it doesn’t look like I will be able to. Let alone boot from it.

I have tried all settings on the controller. IDE, AHCI, & RAID. Using 3 different drives one from Seagate, 2 from Western Digital.

With IDE, there is nothing in the logs that indicates a problem, but there is also nothing in the logs indicating the drive was detected.

AHCI shows an error in the logs:

May 7 14:46:18 opensolaris ahci: [ID 808794 kern.info] NOTICE: hba AHCI version = 1.0

May 7 14:46:18 opensolaris pcplusmp: [ID 803547 kern.info] pcplusmp: pciclass,010601 (ahci) instance 0 vector 0xb ioapic 0x4 intin 0xb is bound to cpu 0

May 7 14:46:29 opensolaris ahci: [ID 632458 kern.warning] WARNING: ahci_port_reset: port 1 the device hardware has been initialized and the power-up diagnostics failed

Finally, RAID: the Device Driver utility in 2008.05 shows no driver.

I have found some references that suggest it may be a problem with the JMicron controller and S.M.A.R.T. on the disk.

Oh well. I guess with 3 x 750GB + 3 x 250GB in disk space I won’t quibble about 1 SATA port. Admittedly, it would be nice to figure this one out though.

Thanks anyway. If you find anything out, please share.

Hi Grady,

All the SATA cables you need are in the motherboard box so you don’t need to buy any more.

@Riot: I haven’t investigated the 2008.05 release, but will take a look sometime. I have an idea it’s based on the Indiana project, but I might be wrong.

I used x86 hardware as I’m familiar with it and it’s cheap and readily available.

Hi Brian, well that sounds a pity and it seems you’ve tried a lot of things.

This log message sounds interesting:

May 7 14:46:29 opensolaris ahci: [ID 632458 kern.warning] WARNING: ahci_port_reset: port 1 the device hardware has been initialized and the power-up diagnostics failed

If it’s referring to the JMicron SATA port, it sounds like something’s being recognised, at least.

You could try disabling the SMART data monitoring and see if that works, as you mentioned there might be a conflict.

Finally, you could look on the forums at http://www.opensolaris.org/os/discussions/ and click on storage-discuss and ask in there about the JMicron.

Let me know what you find out!

Just a heads up, you can kitbash a bootable ISO onto a U3 compatible drive and forget your dodgy DVD-ROM drives!

http://blog.sllabs.com/2008/05/booting-heron-from-u3.html

Good luck, and I’m looking forward to tinkering with ZFS myself, thanks Simon!

Hi Kamilion, I liked your U3 post — I might give it a go with Solaris…

Good luck with ZFS, it’s really great.

I am thinking of building a system on the ASUS M2N-SLI Deluxe mobo, and I’m wondering… does Solaris or OpenSolaris have drivers for the on-board SATA controller(s)? Are all six SATA ports on one controller – or are there two separate controllers – one for two ports and a RAID controller for the remaining four? Has anyone plugged six SATA disks into this mobo and got Solaris or OpenSolaris to function with all six? Do they show up as IDE (c0d0s0) or SCSI (c0t0d0s0)?

The M2N-E looks to be the exact same mobo as the M2N-SLI Deluxe. If you lift the M2N-E model sticker on the mobo “M2N-SLI Deluxe” is silk-screened underneath! It looks like they just pulled some components (2nd gigE, fiber audio, 2nd x16 PCIe slot). Comments?

I built this rig for a home server and it’s rock solid running Solaris 10 U5 and SAMBA:

M/B: Supermicro H8SSL-i2

CPU: AMD Athlon 64 X2 5600+ dual-core 2.793GHz

MEM: 4GB (2x Kingston KVR800D2E5K2/2G 2GB Kit DDR2-800 PC2-6400 ECC)

I/O: one PCI-X 64-bit/133MHz slot, two PCI 32-bit/33MHz slots

VID: Integrated ATI ES1000 Graphics Controller (8MB)

DISK CONTROLLER: Supermicro AOC-SAT2-MV8 133MHz PCI-X SATA2 controller

DISKS: 6 x Seagate ST3500630AS SATA/300 500GB disks (7200 RPM, 16MB cache)

I was going to run SXDE 01/2008, but SXDE is dead now. SXCE is too bleeding edge as is OpenSolaris 2008.05 (using ZFS as root is very cool, but I want more stability for my NAS). I may run OS 2008.05 on the new box tho.

Configured 2 disks as root and mirror, 4 disks in a RAIDZ pool. Disks show up as SCSI (c0t0d0). Drives are currently factory jumpered for 150MB/s. Haven’t tried un-jumpering them for 300MB/s, but it probably wouldn’t make much difference. The OS notices tho:

May 19 18:47:52 toybox sata: [ID 514995 kern.info] SATA Gen1 signaling speed (1.5Gbps)
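
For anyone wondering how the 1.5Gbps in that log line relates to the 150MB/s jumper setting, here is a minimal sketch of the arithmetic. It assumes the standard SATA 8b/10b line encoding (10 bits on the wire per 8 bits of data); the function name is just an illustrative choice.

```python
def sata_payload_mb_per_s(line_rate_gbps: float) -> float:
    """Peak payload rate in MB/s for a SATA link, assuming 8b/10b encoding."""
    line_bits_per_s = line_rate_gbps * 1e9
    payload_bits_per_s = line_bits_per_s * 8 / 10  # 8 data bits per 10 line bits
    return payload_bits_per_s / 8 / 1e6            # bits -> bytes -> MB


gen1 = sata_payload_mb_per_s(1.5)  # the jumpered "Gen1" mode reported in the log
gen2 = sata_payload_mb_per_s(3.0)  # what un-jumpering the drives would negotiate

print(f"SATA Gen1 (1.5Gbps): {gen1:.0f} MB/s peak")
print(f"SATA Gen2 (3.0Gbps): {gen2:.0f} MB/s peak")
```

These are link ceilings only; what a single 7200 RPM disk can actually sustain is a separate question that this sketch does not model.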

Hi, any reason there for choosing an SLI motherboard and an external graphic card instead of using a non-SLI board with onboard graphics? As you stated yourself, “we’re not playing high-speed video games on this machine”. And a 500W power supply seems a little bit oversized. This seems not really optimized for low power consumption.

I have a home server with Solaris Express build v67. I use this SATA-2 card with 8 drive connections:

http://www.supermicro.com/products/accessories/addon/AoC-SAT2-MV8.cfm

I’ve heard that it is the same chipset as in the Sun X4500 Thumper machine with 48 drives. The card gets detected automatically by Solaris during install. The card is PCI-X (not PCI-express). PCI-X slots can only be found on server mobos. If you plug this card into an ordinary PCI slot (which I have done), the transfer rate will drop.

A normal PCI slot at 32bit/33MHz has a peak transfer rate of about 133MB/sec, which is not far from the 150MB/sec of a 1.5Gbps SATA link (SATA II at 3Gbps is 300MB/sec). It would be nice if I could get a full 150MB/sec, but it won’t happen with this card in such a slot. The slot would have to be either 64bit or 66MHz, and then the transfer rate would increase to 267MB/sec.

http://en.wikipedia.org/wiki/List_of_device_bandwidths

If used correctly as a PCI-X card, the max theoretical bandwidth with this card is 1.07GB/sec, which should suffice for most people’s need.
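
The bandwidth figures quoted above all follow from bus width times clock. A rough sketch of that calculation, assuming the nominal PCI clocks of 33.33, 66.66 and 133.33 MHz and one transfer per clock (the helper name is made up for illustration):

```python
def bus_peak_mb_per_s(width_bits: int, clock_mhz: float) -> float:
    """Theoretical peak of a parallel PCI-style bus: bytes per transfer x MHz."""
    return width_bits / 8 * clock_mhz


# (bus width in bits, nominal clock in MHz) for the slots discussed above
slots = {
    "PCI 32bit/33MHz": (32, 33.33),
    "PCI 64bit/33MHz": (64, 33.33),
    "PCI 32bit/66MHz": (32, 66.66),
    "PCI-X 64bit/133MHz": (64, 133.33),
}

for name, (width, clock) in slots.items():
    print(f"{name:20s} ~{bus_peak_mb_per_s(width, clock):.0f} MB/sec")
```

Note that this ceiling is shared by every device on the bus, so all eight drives on the card split it between them.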

The manual claims the card is 64bit 133MHz PCI-X. http://www.supermicro.com/products/accessories/addon/AoC-SAT2-MV8.cfm

I go for an external SATA II card, that way I dont have to worry about picking the right non-server motherboard. If I want an external card, I have to resort to PCI or PCI-express on plain mobos. I dont know of SATA PCIe cards, so I guess the only alternative I have left is PCI cards. This card will follow me between my upgrades, from P4 now, to Penryn.

Everything works flawlessly with the card.

There is one problem with transfer rates: I have a P4@2.4GHz and 1GB RAM, and I get about 20 MB/sec. That is due to the P4 being a 32 bit CPU. As ZFS is 128 bit, it doesn’t like 32 bit. With a 64 bit CPU you get transfer rates in excess of 100MB/sec. I will upgrade my Solaris machine to a Penryn quad core. Nothing needs to be changed for ZFS when upgrading from a 32 bit CPU to a 64 bit CPU. Everything will function automatically.

I’ve heard that an old Opteron 940 mobo often has PCI-X slots. And the CPU is dirt cheap. And the mobo too. And PC3200 memory too. Add this Supermicro AOC card, and you’ve got one hefty server capable of 1GB/sec transfer rates.

Regarding ECC memory, Solaris has something called “self-healing”, which is a kind of ZFS-esque checksumming for RAM. It means RAM will be checked for errors. (I think so; please google self healing.) Adding ECC makes Solaris doubly secure against RAM failures.

Hi Mike, OpenSolaris does have some built-in driver support for onboard SATA controllers. For example, there is support for the NVidia SATA controllers in the Asus M2N-E and M2N-SLI Deluxe motherboards (MCP 55 chipset). One must verify compatibility within the OpenSolaris HCL (Hardware Compatibility List). OpenSolaris will support all six onboard SATA connectors for the two motherboards I have mentioned, for example, although so far, I have only plugged in four disks. The M2N-SLI Deluxe has an additional SATA chipset — the JMicron 363, which drives one additional onboard SATA connector, and one eSATA port on the I/O panel of the motherboard, but I have not tried this so far.

The drives show up as c0t0d0s0 etc.

The similarity between the Asus M2N-E and M2N-SLI Deluxe motherboards is interesting to know. Unless you need the eSATA, SLI, extra SATA port or extra gigabit ethernet connector and the additional items you mention, then the M2N-E looks to be a good board for AMD processors (AM2 socket).

I run with SXCE, as I want the ability to benefit from regular bug fixes, without having to pay $300+ annually that Sun wants to allow you to use the IPS pkg command to update your system packages, like the old ‘apt-get update’ used to work in Debian Linux.

Looks like you have some nice hardware components there — especially the Supermicro SATA card. I think I might use the same card one day when I build a new system.

Hi Peter, I chose the SLI board not for the SLI but because I liked the possibility of exploring the second gigabit ethernet connector, plus the eSATA and extra SATA port provided. If none of these items are of interest, then the Asus M2N-E is probably a good bet; my backup server uses an M2N-E, and this seems to work well. As you say, a 500W PSU is not required, and on my backup server a 430W PSU works fine. After booting has completed, the system draws around 120W, but when booting, unless you can guarantee the drives use staggered spin-up, you will find the system uses 250+ Watts, so an additional buffer is advisable, as it’s best not to run near 100% capability.

Hi Kebabbert, there are published known-to-work motherboards on the OpenSolaris HCL, but you’re right — the SATA card you have chosen does use the same Marvell chipset that the Sun Fire X4500, aka Thumper, uses. It does seem a nice card, which I expect to use in a future system build — a lot of people have highly recommended that card.

ZFS works best with 64-bit processors, so although 32-bit processors work, they are far from optimal.

Fast read/write speeds are cool, as long as you have matching power to transfer the data across the network to connected machines. How fast is your network in real-life sustained large capacity transfers with the system you mention?

Using ECC makes a lot of sense on something like a storage server where you want data integrity.

I get like 10-20MB/sec all the time. From drive to drive, or to another computer. That sucks, but it is ok, as I prioritize safe storage above speed. When I upgrade to Penryn quad core, the speed will increase dramatically. However, I will be content with Penryn and ~120MB/sec for my ZFSraid. It is enough for me. No need for 600MB/sec with Sun X4500 Thumper.

My server is my workstation. To the server/workstation I will connect a 30″ monitor and an Nvidia GeForce. I will dual boot when playing Windows games. I will shut off the power to the ZFSraid most of the time, as it will mainly be used for storage. Therefore, I will have a >250GB system drive that holds temporary stuff: movies, MP3s, etc. Every now and then, I will copy stuff from my ZFSraid to the system drive. As soon as I have something valuable on the system drive, it will get copied to the ZFSraid. Later the system drive will be upgraded to an SSD drive using 0.5 Watt. I don’t need access to all my movies and documents all the time, I’ve found out. I just copy a boatload of things that I want to the system drive, and >250GB is enough for a long time if you delete movies, etc., regularly.

A standard hard drive uses ~10 Watt. My ZFSraid uses 40 Watt. With “zpool export” and “zpool import” I can turn off the power to the drives at will. I dont know if the SATA card supports hot swapping, but it doesnt matter. I just reboot. I dont know how I will turn off the power to the drives. I am thinking of cutting each power cables to the drives and connecting a power on/off switch. What makes most sound in a computer, is the hard drives, Ive found out.

A quad core Penryn 9450 use ~7 Watt in idle, going up to ~50 Watt under full load.

An Nvidia GeForce 9800 uses 200 Watt under full load, and about 60 Watt idle. However, there are new chipsets coming from Intel soon, with similar functionality to the AMD 780. These next generation chipsets allow you to turn off power to the external graphics card, and only use the internal one. This way, the Nvidia 9800 is only used when playing games. If we look at the AMD 780, it looks like 30″ monitors are not supported with the internal 780 graphics. Neither is Windows XP supported, only Vista. And Vista is less optimal. I hope Intel’s future chipsets will fix these two problems. I also wonder how long it will take before Solaris supports these two functions. But Nvidia has good Solaris support, with new drivers all the time.

To the server I will connect my SunRays and place them throughout the building. (BTW, I am the one who told you about SunRays). The server will be in the basement. I will sit with the 30″ in the basement. When someone wants to surf, play MP3s or movies, or work, they can use the SunRays. The server just sends bitmaps to the SunRays, which means using a SunRay will not be any slower than using the server directly. There will be no difference, except for graphics bandwidth (movie playback). For compilations, computations, working, etc. there will be no difference at all. SunRay servers normally don’t have any graphics card at all; they generate the bitmaps in RAM. BTW, VirtualBox 1.6 has just been released, allowing you to run VB from within Zones in Solaris. These virtual zones are very lightweight, and I can give root access to a zone. Within a zone, no one can break out into the global zone. So, people can use Windows XP from a SunRay. Or you can use Windows + Solaris + SunRay:

http://www.youtube.com/watch?v=BCNWB_slB-Y

So, I will have a 9450 quad core Penryn that uses ~10 Watt idle. 4-8GB RAM uses 10 Watt each memory stick. One 250GB System drive ~10 Watt idle. The ZFSraid is normally shut off. The graphic card is normally shut off too. And I will later change the 250GB system drive to SSD using 0.5 Watt. Each SunRay uses 4 Watt, I can have a boatload of them in a drawer. This is my master plan. Opinions? (Al Gores movie influenced me a lot. Save energy whenever you can!).

BTW, Correction: the AMD 940 mobos often has PCI-X slots, NOT the 939 mobos. So a cheap solution would be a 940 mobo + dual core opteron + AOC SATA 2 card PCI-X. If you can edit my earlier comment, please change 939 to 940?

Hi Kebabbert, 10-20 MBytes/sec doesn’t sound that fast. Is this read or write speed, and are you using a gigabit switch and NICs on machines connected to the switch? Also, which sharing mechanism are you using: CIFS or NFS?

The reason I ask is that when I was using a single gigabit ethernet connection between Mac Pro and the ZFS fileserver, using CIFS sharing I seem to recall getting speeds around 40 MBytes/sec, and when I pumped it up to using 2 gigabit NICs with Link Aggregation, I started getting transfer speeds around 80 MBytes/sec, and this is using the cheap Asus M2N-SLI Deluxe motherboard and its built-in six SATA connectors.
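
To put those recalled numbers in context, here is a small sketch comparing them with the raw line rate of gigabit ethernet. The 40 and 80 MBytes/sec figures are the from-memory values above; the helper name and the simple "links x 125 MB/s" model are illustrative assumptions that ignore protocol overhead.

```python
GIGABIT_MB_PER_S = 1e9 / 8 / 1e6  # 125 MB/s raw line rate per gigabit link


def link_utilisation(observed_mb_per_s: float, links: int = 1) -> float:
    """Fraction of the raw aggregate line rate that a transfer achieved."""
    return observed_mb_per_s / (GIGABIT_MB_PER_S * links)


single = link_utilisation(40, links=1)      # one gigabit NIC, CIFS
aggregated = link_utilisation(80, links=2)  # two NICs with Link Aggregation

print(f"single link:     {single:.0%} of {GIGABIT_MB_PER_S:.0f} MB/s")
print(f"aggregated pair: {aggregated:.0%} of {2 * GIGABIT_MB_PER_S:.0f} MB/s")
```

Both cases land at about a third of the raw line rate, which suggests the second NIC scaled throughput roughly linearly and that something other than the wire (CIFS, disks, or the client) was the limit.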

I like your interest in getting a low-power solution going. Using the hardware listed above in this article, my system draws around 120W. So far, I didn’t get success with low power modes, as the suspend (S3) seemed to work, but resume did not work and I did not have the required information to identify which driver was unable to resume correctly.

Also, I like the idea of using Solaris Zones to run Windows, and accessing the Windows session via a Sun Ray. At some point in the future I might try that out.

When you get your system running, it will be interesting to see your power usage readings.

I get 10-20MB/sec all the time, both read and write from my ZFSraid. I am told it is because I have a 32 bit CPU, P4@2.4GHz. ZFS is 128 bit and doesnt like 32 bit CPU. With 64 bit CPU I will get more than 100MB/sec.

OK, that would probably explain it. Let us know what speeds you get with the new processor and machine build.

Sure, will do. But that may take a long time, as there are no Penryn 9450 available yet. And soon Nehalem comes. Argh. What to do, what to do…

PS. If you add a vdev to grow your ZFSraid, make sure you don’t mistype the command. Someone mistyped and added a single drive to a ZFS raid, and then he got new ZFS raid = old ZFS raid + 1 drive.

To experiment with ZFS, you can create ordinary files on UFS and use them as ZFS devices. Then you can safely experiment with ZFS on files acting as disks.

http://www.cuddletech.com/blog/pivot/entry.php?id=446

I’ve recently had a delivery, the same components as Simon has listed above and I’m just in the middle of getting it up and running. I’d be very interested to hear how (if) anyone has got this setup running headless as I get the same problem as Simon posted, doesn’t seem to get past POST but hard to tell without a GPU 😛

Other than that it all seems to be going well, just having a conversation on the osol-discuss mailing list about whether to stick with a nevada build or go over to the main opensolaris distro

Hi Matt, great choice of hardware you chose there 🙂

I read somewhere that non-server motherboards don’t allow successful POST running headless, but I didn’t verify that categorically is the case. One possibility to debug this problem could be to enable POST error logging, then remove video card and reset the machine. When it fails to POST, shutdown, add the video card, power up and inspect the BIOS error logs for any useful log info as to why it failed to POST. If you get anywhere with this, I’d love to hear your findings.

Regarding usage of Nevada or Indiana (aka 2008.05), see a comment I made on the OpenSolaris forum here:

http://opensolaris.org/jive/message.jspa?messageID=235112#235119

Basically, if you use Indiana/2008.05, unless you fork out the $320+ you won’t be able to use IPS to update your system (like the apt-get in Debian Linux), so for me, it is a bit useless. I would rather use Nevada for now and have freedom to update my system if I see a need to. On my todo list is to create a script that will recreate my environment on a new build of Nevada after a new version install.

Aaah, so that’s why the Sun guys were so keen for me to switch and use 2008.x 😀 I’m happy just to stick with Nevada, and if something comes along that _really_ needs an update, I can download the latest ISO image and run the updater from that.

I would be interested in your setup script if that’s in the public domain 🙂

I’ve decided just to shell out the £30 for a cheap PCI-E graphics card, I was desperate to get this server up and running, plus it never hurts to have graphics in place should something go horribly wrong down the line.

Thanks

Well, that could be the reason. I also would expect that they would like lots of new users to use 2008.05 so that any bugs can be identified. However, I think you have to have the paid support option of 2008.05 ($324 per annum?) to be able to submit bug reports, so I don’t see how ‘free’ users of 2008.05 would be able to report any bugs anyway. I will write a post soon that will ask these kinds of questions and maybe it will get some comments regarding clarification of these kinds of issues. I can see how paying $324 might be OK for businesses, but for home users it doesn’t seem right to me. If you want to encourage adoption of an OS, the best way is to make it free for home users, like Linux.

Once I get time to get that setup script together, I will post it here.

Yes, having the graphics card is useful. Some apps don’t run headless anyway. And if you get a low-power, passively-cooled graphics card like the Asus EN6200LE (30 euros) then it uses very little power and makes zero noise.

Can’t you get a serial console working on your system?

I’ve run several x86 Linux headless systems with serial consoles, and I’ve sure used a lot of Solaris on SPARC systems where having a serial console is invaluable.

May you have the best of luck in reducing the power consumption of your file server!

Great write up 🙂

Thanks a lot! I didn’t try to get a serial console working yet. Were you using standard non-server motherboards (Asus home mobos etc), or were you using server motherboards when you got the serial console + headless systems booting? Yep, it will be good when the power saving works better using proper suspend and resume.

Hello Simon!

Meet another ZFS evangelist, this time from Italy!

It’s amazing to see how similar our setups are, probably because of the hard thinking we both did before purchasing parts.

Same mobo & cpu… amazing, as I said.

I’m just sorry I discovered your blog after assembling my system, I would have spared myself a lot of brainstorming… 😉

I use 4 GB RAM and a 2.5” WD Scorpio, 160 GB, for booting the system (SXCE 87 as of writing, but I’m planning to switch to build 91 ASAP), while my main array is a raidz1 pool composed of 8×500 GB WD AAKS.

4 drives are on the onboard controller, while the last 4 are on two PCI-E x1 controllers with Silicon Image 3132 chips.

3.1 TB net storage, not bad…

Lookee here:

    # zpool status
      pool: blackhole
     state: ONLINE
     scrub: none requested
    config:

            NAME        STATE     READ WRITE CKSUM
            blackhole   ONLINE       0     0     0
              raidz1    ONLINE       0     0     0
                c1t1d0  ONLINE       0     0     0
                c2t0d0  ONLINE       0     0     0
                c2t1d0  ONLINE       0     0     0
                c3t0d0  ONLINE       0     0     0
                c4t0d0  ONLINE       0     0     0
                c4t1d0  ONLINE       0     0     0
                c5t0d0  ONLINE       0     0     0
                c5t1d0  ONLINE       0     0     0

    errors: No known data errors

    # zfs list
    NAME        USED  AVAIL  REFER  MOUNTPOINT
    blackhole   742G  2.38T   742G  /blackhole

Just imagine migrating this thing to 8x1TB drives… just wait until these drop to 50 euros or so… mmm…
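
As a rough cross-check of the "3.1 TB net" figure, and of what the 8x1TB upgrade would give, here is a minimal sketch of raidz capacity arithmetic. It assumes drives are labelled in decimal gigabytes (10^9 bytes), that ZFS reports binary units, and that one disk's worth of space per raidz1 group goes to parity. It ignores metadata overhead and exact sector counts, so treat the results as upper bounds. The function name is hypothetical.

```python
TIB = 2**40  # bytes in one binary terabyte


def raidz_usable_tib(n_disks: int, disk_bytes: int, parity: int = 1) -> float:
    """Upper bound on usable raidz space: (disks - parity) x disk size, in TiB."""
    if n_disks <= parity:
        raise ValueError("need more disks than parity devices")
    return (n_disks - parity) * disk_bytes / TIB


current = raidz_usable_tib(8, 500 * 10**9)   # the 8 x 500GB pool above
future = raidz_usable_tib(8, 1000 * 10**9)   # the imagined 8 x 1TB upgrade

print(f"8 x 500GB raidz1: ~{current:.2f} TiB before filesystem overhead")
print(f"8 x 1TB raidz1:   ~{future:.2f} TiB before filesystem overhead")
```

The first figure comes out a little above the 742G used plus 2.38T available that `zfs list` reports, which is the direction you would expect once filesystem overhead is taken off.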

The beast draws about 160w with all disks on, and it dips to around 102w when the array goes idle (1 hour). I think I might shave another 20w or so by removing the video card and going headless.

Maybe I could get even lower by replacing one of the controllers with a port multiplier. Will let you know when I do this.

About the onboard JMicron, I think at the moment it’s still not supported: I tried both using it for boot and for hooking up a secondary drive, but had no luck at all. Maybe one day we’ll be able to use this lonely port, too… 😉

Speaking of problems, I experienced frequent lockups with the Gnome desktop. Switched to XFCE and the problems disappeared. Did you find similar problems?

Well, I’ve talked far too long.

Best wishes for the further development of your rig, and I look forward to hearing from you soon!

You’ve found a fellow reader for your blog. Who knows if we’ll meet one day…

I’ll be in London in late October with my wife, to attend a concert at the KOKO. Who knows…

Forgot to ask one thing…

When I wake up my array from sleep, the disks come up one at a time, which means it takes about 30 secs to access the pool.

Does this happen to you too, and if yes, do you know how to deactivate this sort of “staggered spin up”?

Hi Paul, greetings to you in Italy!

Yes, it’s funny we chose similar hardware. Which make/model PCI Express SATA card did you use (Silicon Image 3132-based) ?

I like your pool name — blackhole 🙂 That sure is quite a beast with 8 drives! And you’re right — when these 1TB drives get a lot cheaper, it will be terrific.

I’d be interested to hear how your efforts to reduce power usage go. Yes, I heard other people mention that the JMicron SATA connector seems to be unsupported so far within OpenSolaris.

I didn’t have lockups with the Gnome desktop, but I had strange cases where lots of spurious mouse events would appear, causing the Gnome interface to be almost impossible to use, but I think this might be caused by the 2-port Linksys KVM switch that I’m using to switch between 2 Solaris boxes (fileserver and backup server).

Thanks for the best wishes with this setup — I’ve got quite a few more ZFS articles I plan to write when I get a bit more time…

Good luck with your system too!

Enjoy the music at KOKO — which band? Not sure yet of my plans for October.

Oh, I almost forgot. When I did use power management on the disks, I remember it did take a while (30 seconds sounds possible) for the data to come back online. I think you are right — there is a staggered spinup of the disks, so that each disk comes back online one at a time. Although this might be inconvenient, this is probably a good idea, as the disks consume a lot more power when they are spinning up, and if all eight drives, in your system, were to spinup at the same time, the power requirements might exceed the capabilities of the computer’s power supply, in some cases.

Paul Emical

If you are concerned about high power usage, then maybe you could even things out by trying the ultra thin client SunRays, as I have posted here on “Drive temps”? They each use 4 watts, and if you connect a few of those to the server, you can ditch all your other computers. Right now your server uses power, your desktop computer uses power, your wife’s computer uses power, etc., summing up to several hundred watts. If you try SunRays instead, then only your server uses significant power, and you can have 10 users simultaneously, each accounting for 10% of one server’s power.

For anyone that’s interested, it seems that the CPU I chose does not, and probably never will, support CPU frequency scaling via Solaris’s AMD PowerNow! support, which allows the processor to use less power when it is idle.

See the following article for more details: http://blogs.sun.com/mhaywood/entry/powernow_for_solaris

To support CPU frequency scaling with AMD processors, it seems the processor must be family 16 (10h) or greater, and of course, mine is family 15.

However, family 16 is the Barcelona processors, and these seem to use between 80 and 120 Watts, according to this page: http://www.theinquirer.net/en/inquirer/news/2007/09/10/average-power-consumption-is-anything-but

So my 45 Watts doesn’t sound too bad after all.

Here’s the diagnostics for my processor (AMD Athlon X2 BE-2350 45W TDP):

    bash-3.2$ kstat -m cpu_info -i 0 -s implementation
    module: cpu_info                        instance: 0
    name:   cpu_info0                       class:    misc
            implementation                  x86 (chipid 0x0 AuthenticAMD 60FB2 family 15 model 107 step 2 clock 2110 MHz)

    bash-3.2$ kstat -m cpu_info -i 0 -s supported_frequencies_Hz
    module: cpu_info                        instance: 0
    name:   cpu_info0                       class:    misc
            supported_frequencies_Hz        2109683672

As you can see, it only supports one frequency: 2.1 GHz.
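
If you want to check your own processor the same way, here is a small sketch that pulls the family number out of the kstat `implementation` string and compares it against the family 16 threshold mentioned above. The threshold comes from my reading of the linked article, and the function names are just illustrative; this only parses text you paste in and does not call kstat itself.

```python
import re


def cpu_family(implementation: str) -> int:
    """Extract the decimal CPU family from a kstat cpu_info implementation string."""
    match = re.search(r"family (\d+)", implementation)
    if match is None:
        raise ValueError("no 'family N' field found in implementation string")
    return int(match.group(1))


def powernow_supported(implementation: str, min_family: int = 16) -> bool:
    """Assumed rule from the article: PowerNow! needs family 16 (10h) or greater."""
    return cpu_family(implementation) >= min_family


# The implementation line from the kstat output above
implementation = (
    "x86 (chipid 0x0 AuthenticAMD 60FB2 family 15 model 107 "
    "step 2 clock 2110 MHz)"
)

print("family:", cpu_family(implementation))
print("PowerNow! frequency scaling expected:", powernow_supported(implementation))
```

For my BE-2350 this reports family 15, so no frequency scaling, which matches the single entry in supported_frequencies_Hz.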

Hello folks – just wanted to say a big thanks for the blog entry and discussion. I’ve been thinking about upgrading my home server for a while now but was concerned about SATA compatibility with OpenSolaris. My server finally gave up the ghost last weekend so I splashed out on an Asus M2N-SLI Deluxe with a new cpu/memory and 4 x SATA drives. I’ve configured the drives in a raidz across 2 onboard controllers and it works like a dream.

When I restored my data I was hitting peak writes of 80MB/s, but this is reading from a single IDE drive across Gigabit ethernet, and compression is enabled on my pool. Compared to my old IDE setup though, this beast flies. Interestingly, when I was thrashing the pool, iostat would show the same %b and %w for each of the 4 SATA drives, which seems a bit odd. The numbers were low anyway, which is the main thing 🙂

Anyway, the onboard ethernet works fine (nge driver) and I’m using a single IDE drive as root and have a DVD-ROM hanging off the channel as a slave. If the board had 2 IDE channels I would probably go for a mirror setup, but I figure I’ll just cron a backup of my root to the zfs pool every night, so whenever I rebuild I’ll have a reference setup for my config files. I might make this a zfs bootable drive at some point, as my last filesystem used to get smashed every time we had a power cut or the box panicked, but that’s for another day.

The graphics card I went for was a GeForce 8400 GS 256MB DDR2 HDTV/DVI (PCI-Express). The cool thing about this is that it has an S-Video out so I can connect it up to my monitor and have the Solaris head as a Picture-In-Picture display overlaying my desktop.

Hi Simon,

Many thanks for all the great info on this series of posts. I just bought an Ultra 20 case off ebay for almost nothing and plan to fill it with this or a very similar mobo. Have you had a chance to test if the eSATA port works ok? It looks like the JMicron 363 chipset is supported from b82 onwards. Trying to hunt down a suitable cpu now…

Cheers z0mbix

Hi z0mbix,

Thanks for the compliments. Unfortunately, no I’ve not had a chance to try out the eSATA port on the back of this Asus M2N-SLI Deluxe motherboard. I’ve seen other people say that the SATA port mounted on the mobo inside the case that is also driven by the JMicron JMB363 chipset is not supported yet in OpenSolaris, although I’ve personally not tried to get it working yet, as with 6 SATA ports already available, I’ve not had a need to. If you do discover that the JMicron eSATA port is in fact supported, I’d appreciate it a lot if you could let me know. I did see a nice drive enclosure for a single SATA disk powered via an eSATA port: the Antec MX-1 which is only about 50 euros/dollars, excluding the drive. See here: http://www.silentpcreview.com/article728-page1.html

Good luck!

Simon

Here is another lengthy discussion of choosing motherboards for Solaris:

http://www.opensolaris.org/jive/thread.jspa?threadID=67341&tstart=30

Just wondering …

Is coupling a processor with a non-ECC on-chip memory controller, such as the Athlon X2, with two ECC DIMMs not just a complete waste?

I thought only Opterons could use such RAM.

Hi Julien, I just took a quick look at Opteron specs and it indeed seems like they have L1/L2/L3 cache ECC protection.

For the processor I have used (AMD Athlon X2), it appears that they do have on-die ECC protection: 64-Kbyte 2-Way Associative ECC-Protected L1 Data Caches and 16-Way Associative ECC-Protected L2 Caches. And the Athlon X2’s integrated memory controller for the RAM has ECC checking with double-bit detect and single-bit correct. See here for more info:

http://www.amd.com/us-en/assets/content_type/white_papers_and_tech_docs/43042.pdf

However, it seems that the Opterons have more comprehensive ECC protection for the L1/L2/L3 on-die caches than the Athlon processors. I suppose you get what you pay for, and I prefer to have some protection rather than none, which would be the case if I had used non-ECC RAM and a non ECC-capable processor.

For a business, the Opterons would be the best choice for the increased ECC protection. For home use though, Opterons and their associated server motherboards are too expensive, and the Opteron processors use a lot more electricity, and so cost more to run.

Simon, great work I appreciate much and following your blog I also started building a ZFS storage server.

Two remarks considering mobo and RAM:

The M2N-SLI has become hard to get; perhaps ASUS isn’t shipping it any more? Although I also picked the M2N-SLI, a better alternative could be the M2N-LR. It’s on the HCL and has onboard VGA, eliminating the need for an extra graphics adapter.

I also picked the same RAM (KVR800D2E5K2) but the 2G version for 8GB of system memory. But 4 sticks/8GB of these aren’t working on the M2N-SLI 🙁 – I have loads of errors with MemTest86 and I’m pretty sure it’s a general issue because I tested with two mobos and 8 sticks in total…

Two sticks / 4GB work flawlessly.

Kingston recommends KVR800D2E6K2 (note “E6” instead of “E5”) for the M2N-SLI so I will test these and report results here.

Thank you for sharing your experiences and keep up the good work!

Thanks Sebastian!

Yes, it could well be that the M2N-SLI Deluxe is coming to the end of its product life now.

It’s interesting you mention the M2N-LR, as I seem to remember that that was the first motherboard I identified as a possible candidate for building a ZFS fileserver. However, if I remember correctly, the dealer told me it only supported Opteron processors, and I considered these to be too power-hungry for what I wanted. It was also around twice the price of the M2N-SLI Deluxe, but you save on the graphics adapter, like you say. However, I just checked the specs again now, and it seems that the M2N-LR supports AMD Athlon 64 X2 processors as well as Opterons, and it’s the M2N-LR/SATA variant that only supports Opterons.

Pity to hear of the problems with 8GB RAM on the M2N-SLI. I also had some problems initially with 4GB RAM on the M2N-SLI Deluxe mobo until I upgraded to a newer BIOS and took out the CMOS battery and put it back again to clear the settings.

Are you using the M2N-SLI or the M2N-SLI Deluxe mobo?

Thanks again. I have a few more posts planned for when I get a little more time… you know how it goes 🙂

Simon,

I’m looking at buying:

ASUS M2N-SLI Deluxe AiLifestyle Series (repaired)

3x Seagate 1.5TB Barracuda 7200RPM SATA-300 32MB

2x Kingston ValueRAM 2GB KIT 240PIN DIMM DDR2 800MHz Ub ecc CL5

I already have a basic 120GB IDE drive, an AMD Athlon64 x2 3500+ (AM2), an nVidia GeForce 5700 video card and case/power supply (I’m going to need to invest in a new power supply too).

Does this seem reasonable to you?

Hi Kevin, whilst not wanting to certify that it will all work, what I will say is that it certainly looks likely.

If possible, do a search on each component on the Sun OpenSolaris HCL (Hardware Compatibility List) — see here:

http://www.sun.com/bigadmin/hcl/

I have the same M2N-SLI Deluxe motherboard and I have not had any major issues with it (one of the two gigabit ethernet ports occasionally seems to fail to initialize on boot). I have never tested the 7th onboard SATA port or the Firewire port, so if you absolutely need those then you’d best do a thorough search. Also, as of Nevada build 101 (SXCE), I have not yet been successful in getting the machine to suspend and resume, for power savings when idle. However, I have discovered that the problem appears to be related to the Nvidia 6200 (Asus EN6200LE) graphics driver not handling the suspend request correctly, but there is a fix for this which I will apply once it has been verified by other people to work well. Your graphics card might be different but I mention it in case it is a general issue.

Likewise, I’ve never used those big 1.5TB drives, so might be best to do a search as, strange as it sounds, I did hear of reports from forums of people having problems with the Samsung Spinpoint 1TB disks — some issues with compatibility between them and the Nvidia SATA chipset if I remember.

Again, on the Kingston site, check using their memory compatibility tool for compatibility with the Asus M2N-SLI Deluxe motherboard.

If you want to get a cheap and perfectly OK case and power supply, I can recommend the Antec NSK6580 as I used one myself for my ZFS backup machine using a bunch of different sized disks to form a large non-redundant pool to store backups on (zfs send/receive of snapshots to it across the network). That case includes a 430W power supply which is more than adequate.

Oops, just re-read and saw you already have a case & power supply, but I’ll leave my comment there in case it helps someone else.

Hope this helps.

Cheers,

Simon

Thanks Simon.

I’m not all that concerned about the suspend function, I have to say. I intend this machine to run constantly in my attic. However, you mention the nVidia drivers; I attempted to install these (using the nvidia-config command line util) after a fresh installation of build 102 and I could no longer boot.

I haven’t been able to find info about nVidia chipsets and problems with large capacity drives. It should all arrive soon though, so I guess I’ll just have to test it.

The Kingston RAM actually has the ASUS M2N SLI Deluxe as the first board listed in a list of boards that it “is created for use in”.

If I get the whole system working I’m going to have to then get a new case and power supply I suspect, especially if I eventually wish to put in more drives.

Thanks and I’ll let you know how it goes,

Kev

Hi Kevin,

I think I read that the NVidia driver fix should be made available in build 104, which is available now. I’m interested in this for the S3 suspend feature, but you’re not interested in suspend.

I expect the 1.5TB drives will be fine, but I would still be interested to know how it goes with them.

Look forward to hearing how your new system works out. Good luck!

Simon

Okay Simon,

Here’s a serious question for you! I made a mistake thinking that my CPU was Socket AM2. In fact it’s a Socket 939, so now I have to buy a new CPU. Which should I buy? Seeing as the board supports Phenom AM2+ CPUs, I was thinking of getting a tri/quad core one, but would this benefit me in any way? Would the ZFS file server use 3 cores if I had them, and had compression turned on?

Kevin

Hi Kevin,

It’s hard to recommend a specific processor as I’m not familiar with them all. Also, I don’t know your expected usage pattern. If I assume that you’ll have mostly one user and reasonably light usage then something like the one I used (AMD Athlon X2 BE-2350) is perfectly adequate. However, my CPU doesn’t support CPU frequency scaling which can allow the processor to be throttled according to the load. This means that my processor is always using about the same power (45W) regardless of load, and that is not too good, as mostly the CPU is idle. Therefore, when you look for a processor it’s probably best to look for a processor that supports CPU frequency scaling to help reduce the power usage, and therefore electricity bill 🙂

Almost certainly, the AMD Phenom processors will be wasted on a home fileserver and will probably use more electricity than a lesser-powered processor with CPU frequency scaling. Take a look here for a comparison of the AMD chips, including an idea of how much power they use:

http://products.amd.com/en-us/DesktopCPUResult.aspx (click on the ‘wattage’ column to sort)

I don’t use compression, but it sounds quite likely that this would use more processor power. You’ll have to hunt around to see what others find useful and which processor they have chosen and why. I think the processor I used is getting harder to find now, but I saw someone using and recommending the AMD Athlon X2 4850e for their ZFS fileserver, which is also a socket AM2 45W TDP processor, and is also pretty cheap ($50 or so). It has a great customer rating at Newegg too:

http://www.newegg.com/Product/Product.aspx?Item=N82E16819103255&Tpk=4850e

If I was making a new fileserver, I would pay big attention to whether the processor supports CPU frequency scaling, as this appears to offer big cost savings on your electricity bill, as your fileserver will spend most of its life idle.

Let me know what you get and how well you rate it after use.

Cheers,

Simon

Thanks man. I decided, since neither the CPU nor the GeForce graphics card was supported by the new mobo, to just get a whole new PC. I’ll sell the old spare one, and I’m going to sell my own desktop too once ZFS is available properly in Ubuntu (and not through FUSE). I therefore somehow justified splashing out on a ridiculous processor (Phenom X4 9950 Black Edition).

This will surely suck all the power out of the house and cost me millions in the long run! I don’t know why I make these decisions! I may even put that in my desktop and put the Athlon64 x2 6000+ into the file server (as I believe that supports frequency scaling).

Anyway, a moment of madness but why not get the best eh? Also, the drives arrived and all three are the firmware and model number that is affected by the freezing problem, so I’m going to have to do a firmware upgrade on em.

Cheers,

Kev

Hi Kevin,

Wow, according to the AMD processor charts shown at http://products.amd.com/en-us/DesktopCPUResult.aspx, depending on which model of the AMD Phenom X4 9950 that you got, it is shown to consume either 125W or 140W.

Hopefully that’s only with a full load, otherwise that’s serious power usage for something that’s on 24/7. Hopefully it supports frequency scaling and will use much less power when idle.
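To put those TDP figures in perspective, here is a rough back-of-the-envelope calculation as a small Python sketch. It assumes, pessimistically, that the processor draws its full TDP all day, every day, which real chips rarely do. The price of 0.20 per kWh is just a placeholder, so plug in your own tariff.

```python
HOURS_PER_YEAR = 24 * 365  # machine switched on 24/7


def annual_kwh(watts):
    """Energy used in one year by a constant load of the given wattage."""
    return watts * HOURS_PER_YEAR / 1000.0


def annual_cost(watts, price_per_kwh):
    """Yearly running cost for a constant load at the given price per kWh."""
    return annual_kwh(watts) * price_per_kwh


PRICE_PER_KWH = 0.20  # hypothetical tariff, not a real quote

# TDP values mentioned in this discussion: BE-2350 and the two Phenom X4 9950 variants.
for tdp in (45, 125, 140):
    print(f"{tdp} W: {annual_kwh(tdp):.1f} kWh/year, "
          f"cost {annual_cost(tdp, PRICE_PER_KWH):.2f}/year")
```

Under that worst-case assumption the 45W chip comes out at roughly 394 kWh a year, against about 1,095 kWh and 1,226 kWh for the 125W and 140W parts.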

Good luck, and be sure to warn the neighbours when you power up that beast 😉

Merry Christmas!

Simon

Hi Simon.

The M2N-SLI Deluxe is not as common as it was about 6 months ago so I had to look for something else that would be supported. I picked up an Asus M3A78-EM and I’m very happy with it so I thought I would share. It’s difficult to be sure that you’re getting a fully supported motherboard when you’re out shopping.

Anyway, the Asus M3A78-EM isn’t too expensive and supports AM2 and AM2+. I’m using 4 of the 5 SATA II ports, Gig Ethernet, integrated graphics etc. All is good.

Hi Heath,

You’re right, the Asus M2N-SLI Deluxe motherboard does seem harder to find these days, so thanks a lot for posting the info regarding the Asus M3A78-EM motherboard. I took a quick look on the Sun OpenSolaris HCL to see if it was listed, and it was — see here:

http://www.sun.com/bigadmin/hcl/data/systems/details/18719.html

I will take a look at the specs on this motherboard now…

I’m glad it has integrated video, as it saves having to buy a graphics card 🙂

Good luck with your systems!

BTW, if you have a power meter, I would be interested in the power your system consumes.

Merry Christmas,

Simon

Hi Simon – concerning the compatibility of the Athlon X2 with ECC memory, I did not know – thank you for answering my (pointless) remark.

I bought all my own components for my home NAS (I invested some $$, as you can see):